nstgc

Everything posted by nstgc

-

That sure sucks. I know there are lists of which drives have the MediaTek chipset that enables it, but there are surely others. Also, I have no clue how to check whether mine has the required chipset. I have a Sony BWU-500S.

-

Linux actually lets you mount both archives (in general) and ISOs. It's just a command line thing. Also, 7zip lets you open ISOs as if they were archives. It can't handle those with a UDF 2.5 or higher file system, but for DVDs or CDs, yeah, it can read them.

-

I've been reading around and apparently some PI/PO scanning software (like the one from Nero) only works on certain drives. Does DVDInfoPro work for all drives? If not, is there one that does?

-

Versus zip? ISO files contain an entire file system, zip files do not.

-

Start the burning somewhere other than the beginning

nstgc replied to nstgc's topic in ImgBurn General

Is the table of contents also duplicated like the file system? [edit] As for the slower read, I mean that it suddenly jumps from slow to what I would expect in a way that's reminiscent of damaged media. I don't think it's the disks, though, since I have this problem when reading DVDs as well. The inner sectors of my old DVDs, when read with this drive, were read very slowly and it was obvious that it was trying to read the sectors several times. It's very unusual for disks to consistently have problems with the inner sectors.

-

Start the burning somewhere other than the beginning

nstgc replied to nstgc's topic in ImgBurn General

I'm not having problems per se, but rather trying to avoid future problems. I haven't actually been unable to read that area, it just slows down significantly. So far I've burned 46 disks and haven't had a single coaster, and the ones I burned back in January haven't rotted yet. But I feel a lot better knowing that there are two copies of the file system so long as I am using UDF 2.50 (which I am). Does this extra copy of the data mean that the drive will be able to recognize the disk as having something on it even if the beginning of the disk is screwed up? I had that problem with some DVD+Rs. I don't know if it was the file system that was messed up or if there is some special section of the disk that says "hey, I have data on me". This is of course important, since if the disk isn't recognized as having data on it, DVDisaster is useless.

-

I'm certain I remember this being mentioned somewhere, but I can't find an option that allows this in the settings, in the guide (for settings), or in the threads where I thought I saw it mentioned. My drive seems to have problems reading the first few GB of a BD-R for some reason. Since the first few kB are the most important, as they include the file table and whatnot (without which the disk appears empty), I would like to avoid burning to these sectors. I believe it is somehow linked to defect management, but I'm not sure.

-

Is there a way to force ImgBurn to simply skip bad writes?

nstgc replied to nstgc's topic in ImgBurn Support

Yeah, that's what made me think of it. Well, thank you.

-

In the event of a bad write (which I just tried simulating by scribbling over the back of a blank disk), it would be useful if it would continue to write to the sectors that are good. This is because even a bad disk can be useful when trying to recover data from a good disk that has gone bad (data corruption is unlikely to occur in the same sector twice).
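To make the "same sector twice" reasoning concrete, here is a minimal Python sketch. It assumes that damage on the two disks is independent and that you already have a list of unreadable sector numbers for each copy; both function names are made up for illustration and are not part of ImgBurn or DVDisaster.

```python
def unrecoverable_sectors(bad_a, bad_b):
    """Sectors unreadable on BOTH copies; only these are truly lost."""
    return set(bad_a) & set(bad_b)


def expected_overlap(total_sectors, bad_count_a, bad_count_b):
    """Expected number of sectors bad on both copies, ASSUMING independent damage."""
    return bad_count_a * bad_count_b / total_sectors


# Hypothetical example: two copies of the same image with a few bad sectors each.
lost = unrecoverable_sectors({10, 11, 500}, {11, 9000})
overlap = expected_overlap(12219392, 1000, 1000)
print(lost, round(overlap, 4))
```

Under those assumptions, even two copies with a thousand bad sectors each are expected to share far fewer than one bad sector, which is why a partly failed burn can still be worth keeping. Real damage (scratches, rot from the edge) is often not independent, so treat this as an optimistic estimate.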

-

I haven't used Metro, but it looks like a mess. It looks fine for a tablet, but for a desktop...yeeee!

-

BD-R disks are cheaper per GB. For the same price you could create several identical copies plus error correction data. Also, the BD-Rs I have seem to be sealed better than the DVD(+/-)Rs that I've had. Better sealing should mean less rot.

-

Before I begin, I'd like to address all those people who will tell me about disk rot and how disks sometimes just can't be read. I have previously archived with DVD+Rs and learned the hard way that disks decay over time and sometimes simply won't read at all. Burnable media isn't as reliable as commercially pressed disks. That is why I am making this topic: to make sure that I don't have the same problem as last time.

Pretty much, I'm backing up a bunch of stuff. I'm a pack rat of sorts (or we can just call it what it is -- OCD/phobia developed from a period where I had a HDD failure every few months) and I like to reinstall my operating systems frequently. Every 6 months or so I reinstall both Windows and Linux. I have a bunch of hard drives lying around, but I'd like to put their contents on BD-Rs. Needless to say, the amount that I need to back up is substantial (>2TB). I want to store it, as well as whatever comes after, on BD-Rs for a long period of time without having to worry about data loss.

I am currently planning on employing two different methods to prevent data loss. Firstly, I'll be using DVDisaster to create ECC files with 64 roots (33.5% redundancy). I will store that on a separate BD-R, which will also contain the ECC file for the previous disk with ECC data on it. Then I will take the ISOs of 6 disks (4 with data and 2 with ECC files) and create par2 files (using MultiPar) with 3000 blocks (since anything more would take DAYS to compute, given that it will be computing it for ~150GB of data) and store 1500 of those blocks on 3 BD-Rs (500 blocks per disk, each in its own file). Each of these disks will be protected with a 32 root (about 14.3% redundancy) ECC file. Six of these ECC files will be stored on a single BD-R, plus one for the previous ECC-containing disk.

The ECC is supposed to take care of basic aging. For most disks this should be sufficient. However, I know from experience that some disks age unexpectedly quickly and others can become damaged in such a way that they simply can't be read at all. This is what the par2 data is for: to take care of those disks that age too quickly or become unreadable. It is also why it's not necessary for them to have more than 500 blocks per disk. It's for those cases where the disk is seriously screwed up. Also, if the par2 disks get too bad off, the files on them are sufficiently small that it should be possible to get enough blocks off of them to reconstruct one entire disk.

I chose not to simply have duplicate disks (instead of par2 files) since it would result in a redundancy ratio of 2:1 (3 disks for every 1 data disk) instead of 5:4 with my current implementation. Also, as disks get old and decay, I will be checking them and making duplicates anyway to replace the dying disks. I am worried about the par2 disks, though, since if one of them goes completely bad I'll likely have to completely redo all disks in that set (of 3).

[edit] Thirdly, is there any brand of media that you guys would suggest? I am currently using Optical Quantum because it's cheap, and so far the disks I burned in January (7 of them) don't show signs of heavy decay. However, if there is a better brand I'll switch to that. [/edit]

Comments and suggestions are much needed. I also have a few questions. Firstly, does DVDisaster handle BD-R well? Secondly, is there a respectable alternative to par2? Par2 takes a short eternity to compute. Ideally I would like to get rid of the two-method system altogether and use only one, but with the need for large block sizes under par2 that isn't possible.

tl;dr: I used to archive with DVD+Rs, and that was a disaster. I'm trying again with BD-Rs and have a plan. If you don't want to read through my plan, at least leave some questions or comments that you find helpful, or that perhaps I can help you with. Thank you.
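For anyone who wants to check the numbers in this plan, here is a rough Python sketch of the arithmetic. It assumes the ECC redundancy figure is roots divided by data bytes in a 255-byte Reed-Solomon codeword, and it reads the par2 numbers as 3000 source blocks over roughly 150 GB with 1500 recovery blocks split across 3 disks. Both readings are assumptions, and the helper names are made up for illustration.

```python
def rs_redundancy(roots, codeword_len=255):
    """Redundancy as roots / data bytes, ASSUMING a 255-byte RS codeword."""
    data_len = codeword_len - roots
    return roots / data_len


def par2_plan(source_bytes, source_blocks, recovery_blocks, discs):
    """Approximate par2 block size and recovery bytes per disc.

    Ignores par2 header overhead and per-file padding to block boundaries.
    """
    block_size = source_bytes // source_blocks
    per_disc = block_size * recovery_blocks // discs
    return block_size, per_disc


high = rs_redundancy(64)    # 64 / 191
normal = rs_redundancy(32)  # 32 / 223
block_size, per_disc = par2_plan(150 * 10**9, 3000, 1500, 3)

print(f"64 roots: {high:.1%}, 32 roots: {normal:.1%}")
print(f"par2 block: {block_size / 10**6:.0f} MB, per disc: {per_disc / 10**9:.0f} GB")
```

Under these assumptions each par2 block is about 50 MB and the 500 blocks per disk come to about 25 GB, so each par2 disk is essentially full. The exact figures depend on the real size of the six ISOs.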

-

So it doesn't have any intended effect on the life of the disk, it just helps prevent coasters. Thank you.

-

Defect management? That sounds important since I'm trying to use BD-Rs for archiving. I just did some Googling, but I haven't found anything really concrete as to what it does. The best I found is that ~5% of the disk is reserved in case of some sort of error, in which case the data is remapped to that location. It doesn't seem like it should work the way a HDD remaps damaged sectors elsewhere, though, since BD-Rs are write-once, which I find desirable. That leads me to think it occurs during the actual burn process in case the media is screwy. I haven't had any coasters so far, so if that's the case then it doesn't matter. What does the defect management do and how does it use these extra sectors?

-

Apparently there are 12219392 sectors on a BD-R (or at least on mine, by Optical Quantum) which, assuming 25GB and 2048 bytes per sector, makes sense. However, it seems as if we are only supposed to burn to 11826176 of those sectors. Is it okay to burn to all 12219392 sectors?
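A quick Python check of those two sector counts, assuming 2048-byte sectors (the sector size is an assumption here; the sector counts are the ones quoted above):

```python
SECTOR_BYTES = 2048          # assumed user-data sector size

total_sectors = 12219392     # full capacity reported for the disc
usable_sectors = 11826176    # what the software is willing to burn

total_bytes = total_sectors * SECTOR_BYTES
usable_bytes = usable_sectors * SECTOR_BYTES
reserved_sectors = total_sectors - usable_sectors
reserved_fraction = reserved_sectors / total_sectors

print(f"total:    {total_bytes:,} bytes")
print(f"usable:   {usable_bytes:,} bytes")
print(f"reserved: {reserved_sectors:,} sectors "
      f"({reserved_sectors * SECTOR_BYTES / 2**20:.0f} MiB, {reserved_fraction:.1%})")
```

With these inputs the full disc works out to just over 25 GB, and the gap between the two figures is 393216 sectors, or 768 MiB, a little over 3% of the disc.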

-

Were you using one of those silly operating systems like Windows or OS X? Try Linux; there may be something that is preventing you from burning the image of a game (Microsoft probably doesn't care what reason you have for burning one of their games, and will stop you even if you have legitimate intent...which I'm guessing you don't).

-

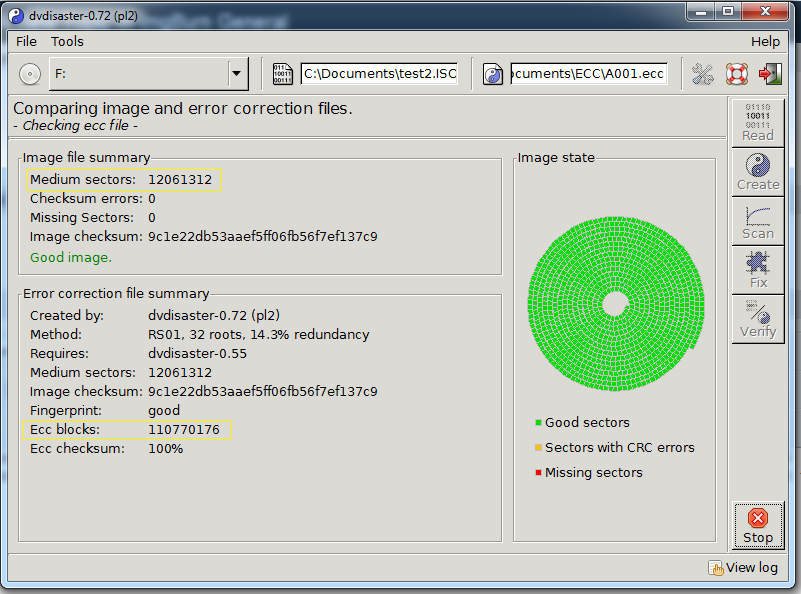

According to DVDisaster's FAQ, under background information, DVDisaster makes ECC blocks of 223 sectors under normal settings. Therefore, I would expect there to be 1 ECC block for every 223 medium sectors, rounded up. However, it seems as if that's not the case. Could anyone explain that? [edit] The thing to notice about the image is that there are more ECC blocks than there are sectors on the medium.
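To put a number on that expectation, here is a tiny Python sketch of the ceiling division described above. It only models the expectation in this post, not how DVDisaster actually counts its ECC blocks; `expected_ecc_blocks` is a made-up helper and the sector count is just an example value.

```python
import math


def expected_ecc_blocks(medium_sectors, sectors_per_block=223):
    """Naive expectation: one ECC block per 223 medium sectors, rounded up."""
    return math.ceil(medium_sectors / sectors_per_block)


# Example value only: a medium with 11826176 sectors.
example = expected_ecc_blocks(11826176)
print(example)
```

Any count larger than the number of medium sectors, as in the screenshot, is far above what this expectation would give, so the program's "ECC block" must be a different unit from the one assumed here.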

-

Can't burn the RS02 ECC data from DVDisaster onto a BD

nstgc replied to nstgc's topic in ImgBurn Support

Thank you. I'm satisfied. I haven't checked to make sure that's the case yet, but I'm sure it is (I'll still check later). [edit] I checked and it does indeed match once I prune the padding. Thank you again.

-

Can't burn the RS02 ECC data from DVDisaster onto a BD

nstgc replied to nstgc's topic in ImgBurn Support

So you are saying that sort of "error" is normal, to be expected, and harmless?

-

Can't burn the RS02 ECC data from DVDisaster onto a BD

nstgc replied to nstgc's topic in ImgBurn Support

I checked the ISO, and it is the proper size. Also, I ran DVDisaster's verification on the augmented ISO and DVDisaster detected the ECC data embedded in the ISO. [edit] I used DVDisaster to rip the disk since that's what the guide said to do. Also, from a physical examination of the disk, it's clearly not filled. I'm currently ripping it with ImgBurn and will update this post with the log. [edit2] I attached the log file for the ripping. Also, I checked the ISO created using ImgBurn, and it has the ECC (which is shocking). However, the ECC is wrong or something. ImgBurn2.log dvdisaster.log

-

Can't burn the RS02 ECC data from DVDisaster onto a BD

nstgc replied to nstgc's topic in ImgBurn Support

What do you mean? Are you saying the ECC wasn't present in the original ISO I burned from? If that is what you are saying, I've already addressed that possibility in my opening post -- the size of the ISO I burned and the ISO I made from the burned disk don't match. [edit] The ISO I ripped from the BD I burned is about 1.5 GB smaller than the ISO I burned to the disk.

-

I am trying to make a BD augmented with RS02 ECC data created with DVDisaster. I first made the ISO using ImgBurn, then made the augmented ISO, then burned the augmented ISO to disk with ImgBurn. However, I followed this guide to check that the ECC data was written properly, and it wasn't. As a further check, the resulting ISO image (from the read step) is too small to contain the extra ECC data that I thought was going to be on there. Does anyone know if, or how, I can get it to work? [edit] Sorry, forgot the log file. ImgBurn.log
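For anyone wanting to run the same sanity check, here is a minimal Python sketch that compares a burned-from ISO with the ISO read back from the disk. It reports the size gap in sectors, whether the part both files share is identical, and whether the extra tail of the longer file is nothing but zero bytes. The 2048-byte sector size and the idea that padding is all zeros are assumptions, and `compare_images` is a made-up helper, not something ImgBurn or DVDisaster provides.

```python
import os
import tempfile

SECTOR_BYTES = 2048  # assumed sector size


def compare_images(path_a, path_b, chunk=1 << 20):
    """Return (gap_in_sectors, common_part_identical, extra_is_zero_padding)."""
    size_a, size_b = os.path.getsize(path_a), os.path.getsize(path_b)
    common = min(size_a, size_b)
    longer = path_a if size_a >= size_b else path_b

    # Compare the region that exists in both files, chunk by chunk.
    identical = True
    with open(path_a, "rb") as a, open(path_b, "rb") as b:
        remaining = common
        while remaining > 0:
            n = min(chunk, remaining)
            if a.read(n) != b.read(n):
                identical = False
                break
            remaining -= n

    # Check whether the tail of the longer file is only zero bytes.
    zero_tail = True
    with open(longer, "rb") as f:
        f.seek(common)
        while True:
            block = f.read(chunk)
            if not block:
                break
            if any(block):
                zero_tail = False
                break

    return abs(size_a - size_b) // SECTOR_BYTES, identical, zero_tail


# Small self-contained demo with made-up data instead of real ISOs.
with tempfile.TemporaryDirectory() as tmp:
    original = os.path.join(tmp, "original.iso")
    padded = os.path.join(tmp, "padded.iso")
    truncated = os.path.join(tmp, "truncated.iso")
    with open(original, "wb") as f:
        f.write(b"\x01" * 6144)
    with open(padded, "wb") as f:
        f.write(b"\x01" * 6144 + b"\x00" * 2048)   # same data plus zero padding
    with open(truncated, "wb") as f:
        f.write(b"\x01" * 4096)                    # last sector missing
    padded_result = compare_images(original, padded)
    truncated_result = compare_images(original, truncated)

print(padded_result, truncated_result)
```

A result with an identical common part and a zero-only tail means the two images differ only by padding. An identical common part with a non-zero tail means real data (for example ECC data) is missing from the shorter image.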