
Everything posted by LIGHTNING UK!

-

Trojan-dropper.win32.agent.blk in ImgBurn !!!

LIGHTNING UK! replied to Wigman's topic in ImgBurn General

Cool, glad to hear it.

-

Trojan-dropper.win32.agent.blk in ImgBurn !!!

LIGHTNING UK! replied to Wigman's topic in ImgBurn General

Might be a problem with UPX v3-compressed programs, then, which both of the ImgBurn ones are.

-

OK, I've added another 'fallback' search to determine the image file format if the other attempts fail. It now loads the image OK, but it will only work properly if the image is a 'basic' one (i.e. single session, single track). If it's not, it'll be burnt as one anyway.
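For the curious, here is a minimal Python sketch of what one such 'fallback' search could look like. It is not ImgBurn's actual logic. It assumes the payload is a plain ISO 9660 filesystem with 2048-byte sectors hidden behind an unknown-length header, and looks for the Primary Volume Descriptor signature (type byte 1 followed by 'CD001'), which normally sits at sector 16. Backing off 16 sectors from that hit gives the offset where the real image data starts. The names 'find_iso_data_offset', 'PVD_SIGNATURE' and so on are hypothetical.

```python
PVD_SIGNATURE = b"\x01CD001"  # volume descriptor type 1 + ISO 9660 standard identifier
SECTOR_SIZE = 2048            # assumption: 'cooked' 2048-byte sectors, not raw 2352-byte ones
PVD_SECTOR = 16               # the Primary Volume Descriptor normally lives at LBA 16


def find_iso_data_offset(data: bytes):
    """Return the byte offset where ISO 9660 sector 0 starts, or None.

    Hypothetical fallback: scan the whole buffer for the PVD signature and
    back off 16 sectors to find where the filesystem itself must begin.
    Hits too close to the start of the buffer to be a real PVD are skipped.
    """
    pos = data.find(PVD_SIGNATURE)
    while pos != -1:
        start = pos - PVD_SECTOR * SECTOR_SIZE
        if start >= 0:
            return start
        pos = data.find(PVD_SIGNATURE, pos + 1)
    return None
```

If an offset is found, everything from that point on can be treated as a plain single-session, single-track image, which matches the limitation described above. Raw 2352-byte-sector images would need a different offset calculation.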

-

Trojan-dropper.win32.agent.blk in ImgBurn !!!

LIGHTNING UK! replied to Wigman's topic in ImgBurn General

What does it find it in: the installation program or ImgBurn.exe itself? The NSIS installer I use always has these issues! Either way, both are free from viruses, so please report this 'false positive' to Kaspersky.

-

The file is in their own proprietary format with loads of rubbish stuck on the front of it.

-

Get some other discs; your drive doesn't like those. Verbatim (MCC dye) and Taiyo Yuden are the ones we recommend.

-

Email it to me. Look in the program's 'About' box for the address. Ta.

-

Well, you could use a hex editor, something like Hex Workshop. Or, if you can use the command prompt (cmd.exe), use this: http://download.imgburn.com/grab5m.zip

Usage: GRAB5M

For example:

1. Extract it to a folder called 'grab5m' in the root of C:.
2. Open a command prompt window.
3. Change to the grab5m folder by typing: CD \grab5m
4. Then type: grab5m "c:\my_odd_img_file.img" "c:\5mb_of_image.img"
5. Zip/RAR up the '5mb_of_image.img' file and email it to me.
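For anyone who would rather not run an unfamiliar .exe, the same job (copy the first 5MB of a file into a new file) can be sketched in Python. This is a hypothetical stand-in, not the grab5m tool itself. It assumes '5MB' means 5 × 1024 × 1024 bytes, and the names 'grab_first_bytes' and 'FIVE_MB' are made up for illustration.

```python
import sys

FIVE_MB = 5 * 1024 * 1024  # assumption: "5MB" means 5 MiB


def grab_first_bytes(src_path, dst_path, limit=FIVE_MB):
    """Copy at most `limit` bytes from the start of src_path into dst_path.

    Returns the number of bytes copied, which is less than `limit`
    if the source file is smaller than that.
    """
    copied = 0
    with open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        while copied < limit:
            chunk = src.read(min(65536, limit - copied))
            if not chunk:
                break  # hit the end of a file shorter than `limit`
            dst.write(chunk)
            copied += len(chunk)
    return copied


if __name__ == "__main__" and len(sys.argv) == 3:
    # e.g. python grab.py "c:\my_odd_img_file.img" "c:\5mb_of_image.img"
    grab_first_bytes(sys.argv[1], sys.argv[2])
```

The resulting file can then be zipped up and emailed exactly as in step 5.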

-

BEFORE:
I 22:06:05 ImgBurn Version 2.3.2.0 started!
I 22:06:05 Microsoft Windows Server 2003, Standard Edition (5.2, Build 3790 : Service Pack 2)
I 22:06:05 Total Physical Memory: 2,094,704 KB - Available: 290,000 KB
I 22:06:05 Initialising SPTI...
I 22:06:05 Searching for SCSI / ATAPI devices...
I 22:06:05 Found 2 DVD-ROMs, 2 DVD

-

They must be weird img files. Send me the first 5MB of one of them and I'll see what I can do.

-

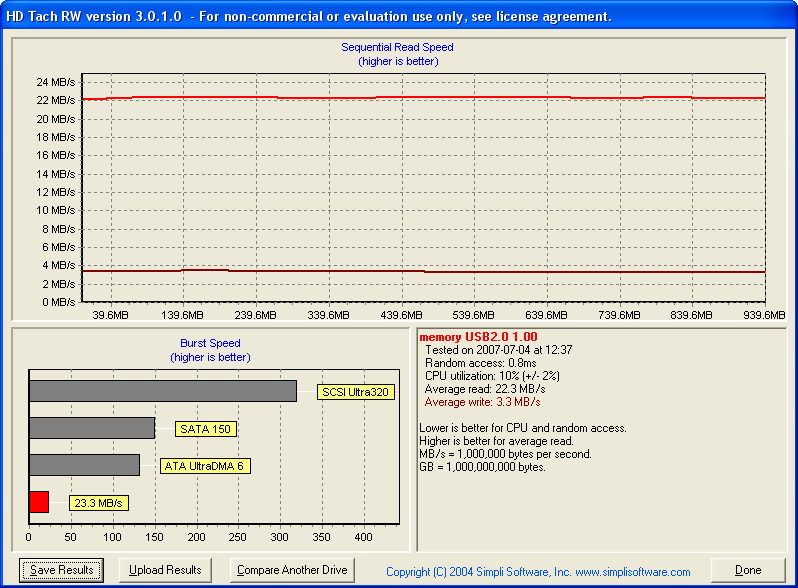

I formatted mine to NTFS manually from the command prompt using: format g: /fs:ntfs

You need to change it to 'optimise for performance' in Device Manager first, though, before it'll let you. But it's not really worth messing around with, as the fix I have now works for all filesystems: you just have to bypass the OS buffering using an extra flag in the 'CreateFile' API. The trouble is that you then can't write arbitrarily sized amounts of data to the file; it always has to be a multiple of the sector size.
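The flag in question is presumably 'FILE_FLAG_NO_BUFFERING', with which Windows requires every write's length and file offset to be a multiple of the volume's sector size. Below is a minimal Python sketch of the bookkeeping that constraint forces: the final chunk is zero-padded up to a whole sector, and the file is trimmed back to its true length afterwards. Python's plain open() does not expose that flag, so this uses an ordinary file purely to show the arithmetic. The 512-byte sector size and the names 'round_up' and 'write_padded' are assumptions for illustration.

```python
import os

SECTOR_SIZE = 512  # assumption: a real program would query the volume's sector size


def round_up(n, multiple=SECTOR_SIZE):
    """Smallest multiple of `multiple` that is >= n."""
    return -(-n // multiple) * multiple


def write_padded(path, payload):
    """Write payload using only sector-multiple write lengths, then trim.

    Mimics the unbuffered-I/O rule: every write is a whole number of
    sectors, so the tail is zero-padded, and the file is truncated back
    to len(payload) in a separate step once writing is finished.
    Returns the padded length that was actually written.
    """
    padded_len = round_up(len(payload))
    padded = payload + b"\x00" * (padded_len - len(payload))
    chunk = 64 * 1024  # 64 KiB is itself a multiple of the sector size
    with open(path, "wb") as f:
        for off in range(0, padded_len, chunk):
            f.write(padded[off:off + chunk])
    # Fix up the real file length afterwards (normal, buffered access).
    os.truncate(path, len(payload))
    return padded_len
```

The extra truncate step is the usual price of unbuffered writes: the data goes out in sector-sized pieces, and the real file length has to be restored separately.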

-

I've sussed it now, but it's going to take some tinkering to make sure I don't break other stuff!

-

No book type selections for Pioneer burners?

LIGHTNING UK! replied to LostHighway's topic in ImgBurn Support

The Welcome tab is just a welcome; it doesn't do anything! It simply tells you to press 'OK' in the sense that doing so closes the window and stops you messing about with things you don't understand. In any case, book type stuff is automatic on Pioneer drives; there's nothing the software can do.

-

It doesn't apply to Read mode. By definition, Read mode produces an exact copy of the original disc, so if the gaps were there in the first place, they'll still be there in the ISO. If they weren't there, they won't be.

-

Turn off 'auto calculate', then it won't prompt each time. Just click the button when you're done.

-

Hmm, I see the problem but don't know of a way around it.

Firstly, unless the drive uses NTFS, the files aren't pre-allocated (it takes ages on FAT / FAT32 compared to NTFS, so I avoided it). That basically means that every 'Write' operation that extends the length of the file makes Windows update the file system to reflect that change. That of course means there's a lot of random accessing going on, and USB memory sticks are very slow at that. I can get rid of that overhead by pre-allocating on all filesystems. Once that's implemented (very easy), the whole thing is a bit faster.

The next issue is that, for some VERY odd reason, Windows decides to write to the drive twice. I send my data in chunks of 65536 bytes. I can see Windows writing the data physically to the drive using the same transfer length, but it's issuing two commands for the same data, and to the same LBA: one for 65536 bytes, the other for 4096. Again, I've *NO* idea why it's doing that, and it again introduces 'random access' into the write equation, meaning it can never be quick. I'll keep digging / playing.
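The pre-allocation idea can be sketched in a few lines of Python, assuming the final image size is known up front: extend the file to its full length once, then fill it in 65536-byte chunks so that no individual write changes the file's length. Whether truncate() actually reserves clusters (rather than creating a sparse or lazily zeroed region) depends on the OS and filesystem, so treat this as an illustration of the idea rather than ImgBurn's implementation. The name 'write_preallocated' is hypothetical.

```python
CHUNK_SIZE = 65536  # the transfer size mentioned in the post


def write_preallocated(path, total_size, chunks):
    """Pre-size the file, then write `chunks` sequentially into it.

    `chunks` is an iterable of bytes objects whose lengths should sum to
    total_size. Because the file already has its final length, none of
    the writes extends it, so the file length doesn't change per write.
    """
    written = 0
    with open(path, "wb") as f:
        f.truncate(total_size)  # extend to the final size in one step
        f.seek(0)
        for chunk in chunks:
            f.write(chunk)
            written += len(chunk)
    if written != total_size:
        raise ValueError(f"wrote {written} bytes, expected {total_size}")
    return written
```

Usage would be something like write_preallocated("out.iso", image_size, chunk_iterator), where the chunk iterator yields CHUNK_SIZE pieces of the image.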

-

HDDs working and optical drives working are two VERY different things! Lots of IDE/SATA controllers won't support optical drives, or at least not very well. Unless the drive is on the chipset's own controller (which it actually sounds like yours is), there are normally problems with using them. So, assuming yours is connected to the proper NVIDIA controller and not to some third-party add-on chipset, the only drivers you need to check are the NVIDIA ones. Some say the default Microsoft drivers work best, others say the latest NVIDIA nForce ones do. So basically, visit nvidia.com and download + install them. If that doesn't work, revert to the Microsoft driver.

-

You just had a 'Write Error'. Check for firmware updates for the drive and buy some better-quality discs. We only recommend Verbatim (MCC dye) and Taiyo Yuden.

-

Right, yeah, it's misreporting the disc capacity. The 'Get Capacity' command is returning '605' (the last sector written on the disc), so when starting from 0, that gives us a readable region of LBAs 0 to 605, i.e. 606 sectors. This is confirmed in the TOC info. But then there's an odd-looking gap after the track and before the lead-out that wouldn't normally be there, and that's where your 2 sectors are going missing. I assume you've tried burning to a real CD-R rather than a CD-RW?
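The off-by-one here is easy to trip over. Assuming the 'Get Capacity' command corresponds to the SCSI/MMC READ CAPACITY (10) command, its 8-byte response holds the last logical block address followed by the block length, both big-endian. Because the first value is the last addressable sector rather than a count, you add one to get the number of readable sectors. A minimal Python sketch of that arithmetic; 'parse_read_capacity' is a hypothetical helper, no drive I/O is shown, and the 2048-byte block length is an assumption for a data CD.

```python
import struct


def parse_read_capacity(response: bytes):
    """Decode an 8-byte READ CAPACITY (10) response.

    Bytes 0-3: last logical block address (big-endian)
    Bytes 4-7: block length in bytes (big-endian)
    Returns (sector_count, total_bytes).
    """
    last_lba, block_len = struct.unpack(">II", response[:8])
    sector_count = last_lba + 1  # LBAs 0..last_lba inclusive
    return sector_count, sector_count * block_len


# The case from this thread: the drive reports 605 as the last LBA.
count, size = parse_read_capacity(struct.pack(">II", 605, 2048))
print(count, size)  # 606 1241088
```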

-

Out of interest, is it slow if you build HDD to USB, or just USB to USB? What if you go USB to HDD, is that slow? Actually, thinking about it, I don't suppose write caching is enabled for those USB devices, and I pretty much deal in sectors within the program. I guess that would bring about a lot of overhead if the OS isn't caching the writes and then doing one larger 'write' operation. When I get a minute I'll look into it a bit more closely.
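To illustrate why per-sector writes hurt when nothing is caching them, here is a minimal Python sketch that counts how many write calls reach an underlying 'device' when 2048-byte sectors are written directly versus through Python's io.BufferedWriter with a 64 KiB buffer. 'CountingRaw' is a hypothetical stand-in for the device, and Python's buffering is only an analogy for OS write caching, not how ImgBurn or Windows does it.

```python
import io

SECTOR = 2048


class CountingRaw(io.RawIOBase):
    """Fake 'device' that records how many write calls it receives."""

    def __init__(self):
        super().__init__()
        self.calls = 0
        self.data = bytearray()

    def writable(self):
        return True

    def write(self, b):
        self.calls += 1
        self.data += bytes(b)
        return len(b)


def write_sectors(target, n_sectors):
    """Write n_sectors sectors, one write call per sector."""
    for i in range(n_sectors):
        target.write(bytes([i % 256]) * SECTOR)


direct = CountingRaw()
write_sectors(direct, 320)  # one device write per sector

cached = CountingRaw()
buffered = io.BufferedWriter(cached, buffer_size=65536)
write_sectors(buffered, 320)
buffered.flush()  # push out whatever is still sitting in the buffer

print(direct.calls, cached.calls)
```

The direct path makes 320 device writes, while the buffered one coalesces them into roughly one write per 64 KiB, with identical data arriving at the 'device' either way.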

-

It will only take off the extra bytes at the end, not a full sector's worth. For instance, I just built a test ISO from a random file on my desktop; its size came out as:

I 23:14:25 Image Size: 1,245,184 bytes

I then used Hex Workshop to insert 5 bytes on the end of it. Explorer then shows the size as: 1.18 MB (1,245,189 bytes)

Once the image is loaded into ImgBurn's Write mode, the size is still shown as 1,245,184. ONLY those 5 bytes have been ignored.
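The arithmetic behind that example, assuming 2048-byte sectors: only whole sectors count towards the image size, so any partial sector at the end of the file is dropped. A minimal Python sketch; 'usable_image_size' is a hypothetical name.

```python
SECTOR_SIZE = 2048  # assumption: user-data bytes per sector in a standard ISO


def usable_image_size(file_size, sector_size=SECTOR_SIZE):
    """Round file_size down to a whole number of sectors."""
    return (file_size // sector_size) * sector_size


print(usable_image_size(1_245_184))  # 1245184 (608 whole sectors)
print(usable_image_size(1_245_189))  # 1245184: the 5 extra bytes are ignored
```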

-

You're not really giving us anything to go on! Tell us the specifics! How big is the image, going by the file properties in Explorer? How big does ImgBurn think it is once loaded in Write mode? How big is it once burnt and looked at in Read mode (look in the info panel on the right)? Just copy + paste everything from the info panel and log window (after burning / reading). Screenshots help too. As for ASPI not working, it works fine. If you have SPTD installed (part of DAEMON Tools / Alcohol), ensure you're running v1.50.